AI has advanced significantly, and multimodal AI is driving much of that evolution. Multimodal AI processes multiple data types together, such as text, images, video, and audio, which gives a model more context for each decision. That added context supports more natural, human-like interactions and improves systems’ context-aware performance.

It’s becoming a prominent tool in healthcare, finance, manufacturing, automotive, and many other sectors that use advanced data analysis and precise, actionable insights.

Multimodal AI’s impact on industries is reflected in its growing market value. According to Grand View Research, the global multimodal AI market is expected to reach $10.89 billion by 2030. As more fields demand AI models that can interpret and act on multiple data types concurrently, multimodal AI is becoming integral to their operations.

Let’s explore how multimodal AI functions and its practicality across industries.

What is Multimodal AI?

Multimodal AI is a class of artificial intelligence systems designed to process and analyze multiple data types simultaneously.

Rather than relying on a single data source, such as text or images, multimodal AI integrates inputs like natural language, visual content, audio signals, and sensor data to produce precise and context-aware insights.

This capability allows AI systems to address complex scenarios by synthesizing data from different sources to provide actionable outcomes.

Technical Architecture

Multimodal AI uses deep learning architectures such as convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequential data processing, and transformer models for complex text analysis.

These models use techniques like attention mechanisms to focus on key data points across modalities, and tensor fusion to combine the representations of different data inputs into a joint one. This alignment across modalities enables multimodal AI to process data cohesively for accurate, real-time predictions and decisions.
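As a rough illustration of tensor fusion, the sketch below takes the outer product of per-modality embeddings, each extended with a constant 1 so that the single-modality features survive alongside the cross-modal interaction terms. The function name and the example vectors are assumptions made for illustration; production systems apply this to learned embeddings inside a neural network.

```python
from itertools import product

def tensor_fusion(*embeddings):
    """Fuse modality embeddings via the outer product of [embedding; 1] vectors."""
    # Appending 1.0 keeps each modality's own features in the fused result,
    # next to the products that capture cross-modal interactions.
    augmented = [list(e) + [1.0] for e in embeddings]
    fused = []
    for combo in product(*augmented):
        value = 1.0
        for x in combo:
            value *= x
        fused.append(value)
    return fused

text_vec = [0.5, 2.0]   # hypothetical text embedding
image_vec = [3.0]       # hypothetical image embedding
fused = tensor_fusion(text_vec, image_vec)
print(fused)  # flattened (2 + 1) x (1 + 1) outer product
```

The fused vector grows multiplicatively with the number and size of modalities, which is one reason this approach is usually paired with small embeddings or a low-rank approximation.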

Functional Execution

Multimodal AI boosts operational capabilities through integrated, context-driven responses.

For instance, in an autonomous driving system, multimodal AI processes input from LiDAR sensors, radar, visual cameras, and audio signals to assess traffic conditions, detect obstacles, and adjust driving actions instantly.

The system can interpret each modality in real-time and synchronize responses for safe navigation. The ability to simultaneously process this diverse range of inputs gives multimodal AI systems an edge in industries that rely on real-time, complex data streams.

Application in Real-World Use

Multimodal AI is applied in several industries that have diverse data streams and need rapid response systems.

In healthcare, it integrates medical imaging, patient history, and biometric data for enhanced diagnostic capabilities. In manufacturing, it improves predictive maintenance by merging sensor readings, visual inspections, and historical machine performance data to detect and prevent equipment failure.

These systems are built on the technical prowess of teams with deep expertise in deep learning, machine learning, and neural network engineering.

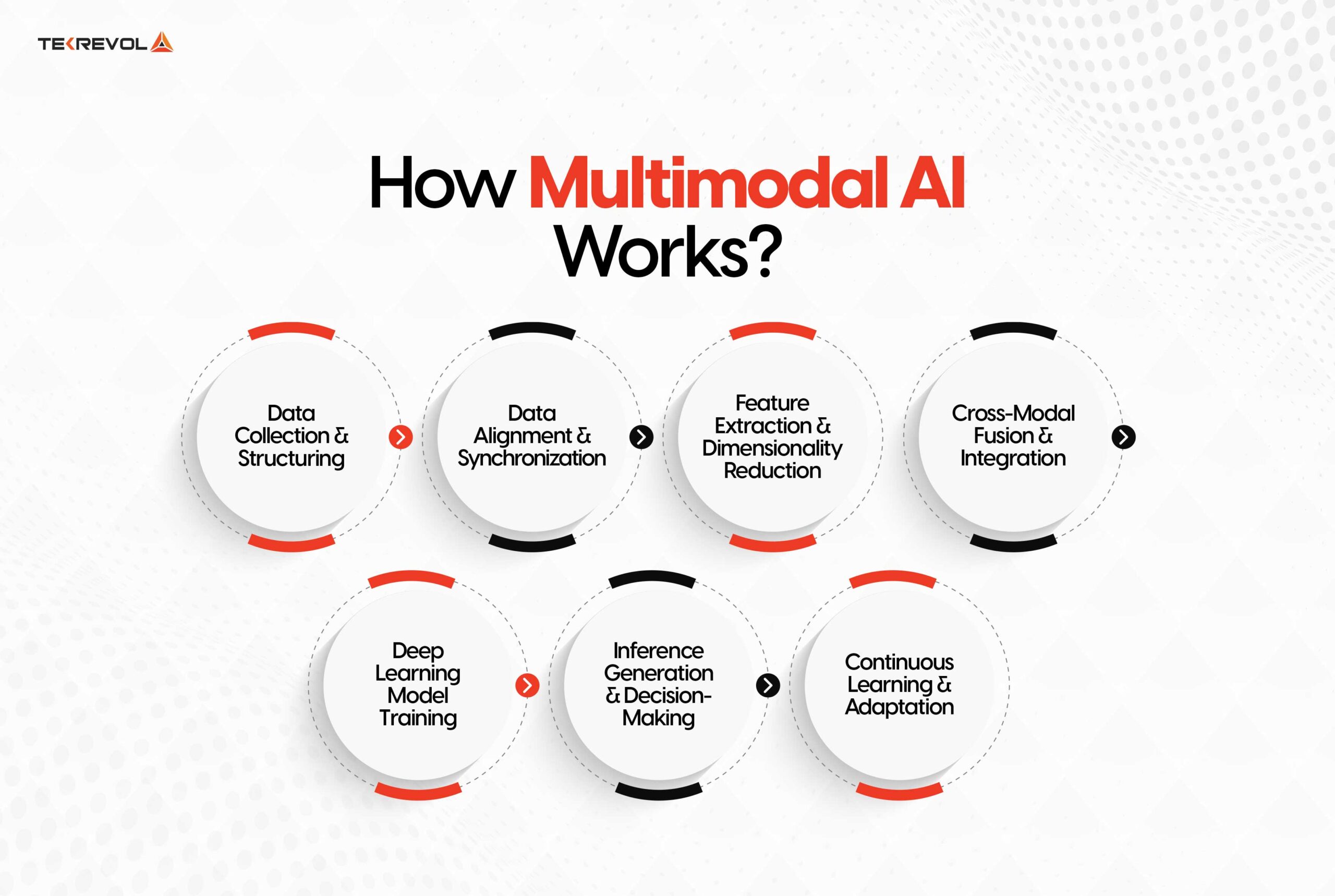

How Multimodal AI Works: A Technical Overview

Multimodal AI merges data from different modalities to provide a holistic view and make decisions based on a unified interpretation of that data. The technology’s core lies in its ability to process and synchronize different data types in real-time.

Here’s a step-by-step breakdown of how multimodal AI works:

Data Collection & Structuring

Multimodal AI begins with collecting data from various sources: natural language input (text), image/video feeds, and audio signals. Each modality undergoes a preprocessing phase, where structured formats are applied to ensure data compatibility. For text, tokenization and embedding techniques such as BERT or Word2Vec are used, while images and videos undergo feature extraction using CNNs (Convolutional Neural Networks).
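To make the text side of this preprocessing concrete, here is a minimal sketch that tokenizes a sentence and averages per-token vectors into one fixed-size embedding. The tiny `EMBEDDINGS` table is a hypothetical stand-in for a trained model such as Word2Vec or BERT, which would supply learned vectors instead.

```python
PUNCTUATION = ".,!?;:"

# Hypothetical 2-dimensional embedding table; a real system would load
# vectors from a trained model rather than hard-coding them.
EMBEDDINGS = {"chest": [1.0, 0.0], "pain": [0.0, 1.0]}
UNKNOWN = [0.0, 0.0]

def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    tokens = (tok.strip(PUNCTUATION) for tok in text.lower().split())
    return [tok for tok in tokens if tok]

def embed(text):
    """Average the token vectors into a single sentence-level embedding."""
    vectors = [EMBEDDINGS.get(tok, UNKNOWN) for tok in tokenize(text)]
    if not vectors:
        return list(UNKNOWN)
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(UNKNOWN))]

print(tokenize("Chest pain."))
print(embed("Chest pain."))
```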

Data Alignment & Synchronization

Aligning diverse data sources is essential for cohesive AI models. The system synchronizes inputs across modalities. For example, video frames and their accompanying audio are aligned with relevant text transcripts or metadata. Tensor fusion or bilinear pooling techniques align these different data points within a common semantic space.
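The temporal part of this step can be sketched as follows: each video frame timestamp is matched to the transcript segment that covers it. The segment dictionary layout (`start`, `end`, `text`) is an assumption for illustration, and the sketch covers only time synchronization, not the learned alignment into a shared semantic space.

```python
from bisect import bisect_right

def align_frames_to_segments(frame_times, segments):
    """Pair each frame timestamp with the transcript segment covering it, or None."""
    # Assumes segments are sorted by start time and do not overlap.
    starts = [seg["start"] for seg in segments]
    aligned = []
    for t in frame_times:
        i = bisect_right(starts, t) - 1  # last segment starting at or before t
        if i >= 0 and t < segments[i]["end"]:
            aligned.append((t, segments[i]["text"]))
        else:
            aligned.append((t, None))  # frame falls in a gap between segments
    return aligned

segments = [
    {"start": 0.0, "end": 2.0, "text": "hello"},
    {"start": 2.5, "end": 4.0, "text": "world"},
]
pairs = align_frames_to_segments([0.5, 2.2, 3.0], segments)
print(pairs)
```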

Feature Extraction & Dimensionality Reduction

Once synchronized, the AI extracts key features from each modality. For text data, NLP models extract semantic meaning, while images undergo object detection via CNNs. Audio signals are analyzed using spectrograms for sound pattern recognition. Techniques like principal component analysis (PCA) or t-distributed stochastic neighbor embedding (t-SNE, more commonly used for visualization) reduce data dimensionality, keeping processing efficient while preserving most of the informative structure in the data.
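As a small illustration of dimensionality reduction, the sketch below estimates the first principal component of a feature matrix by power iteration and projects each row onto it. It is a teaching sketch under simple assumptions (a handful of rows, one component); in practice a numerical library would compute PCA through an eigendecomposition or SVD.

```python
import math

def first_principal_component(rows, iterations=100):
    """Estimate the mean and dominant PCA direction of `rows` by power iteration."""
    n, dim = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dim)]
    centered = [[r[j] - means[j] for j in range(dim)] for r in rows]
    # Sample covariance matrix (dim x dim).
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(dim)]
           for i in range(dim)]
    vec = [1.0] * dim  # arbitrary starting direction
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0.0:  # no variance along the current direction
            break
        vec = [x / norm for x in nxt]
    return means, vec

def project(rows, means, component):
    """Reduce each row to a single coordinate along `component`."""
    return [sum((x - m) * c for x, m, c in zip(row, means, component))
            for row in rows]

features = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]  # hypothetical 2-D features
means, component = first_principal_component(features)
reduced = project(features, means, component)
print(component, reduced)
```

Because the example points lie on a single line, one component captures all of their variance; real feature sets lose some information when reduced, so the number of retained components is a trade-off.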

Cross-Modal Fusion & Integration

This is where the strength of multimodal AI lies. The system integrates features from various data sources through deep multimodal fusion. Fusion can occur at the early stages (combining raw data or low-level features) or late stages (combining decision outcomes). A hybrid approach, which merges both raw data and model inferences, can deliver better results than either alone. This enables the system to consider all inputs in a meaningful way to create a unified model output.
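The difference between early and late fusion can be sketched in a few lines. In this illustration, early fusion concatenates per-modality feature vectors before any model sees them, while late fusion averages the class probabilities produced by separate per-modality models. The function names, modality names, and numbers are hypothetical.

```python
def early_fusion(features_by_modality):
    """Concatenate per-modality feature vectors into one joint input vector."""
    fused = []
    for name in sorted(features_by_modality):  # fixed order keeps the layout stable
        fused.extend(features_by_modality[name])
    return fused

def late_fusion(probs_by_modality, weights=None):
    """Combine per-modality class probabilities with a weighted average."""
    names = sorted(probs_by_modality)
    if weights is None:
        weights = {name: 1.0 for name in names}
    total = sum(weights[name] for name in names)
    n_classes = len(probs_by_modality[names[0]])
    return [
        sum(weights[name] * probs_by_modality[name][k] for name in names) / total
        for k in range(n_classes)
    ]

joint_input = early_fusion({"text": [0.1, 0.2], "image": [0.9]})
combined = late_fusion({"text": [0.5, 0.5], "image": [1.0, 0.0]})
print(joint_input, combined)
```

A hybrid design would feed `joint_input` to one model and then blend that model's output with the per-modality predictions, for example through the same weighted average.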

Deep Learning Model Training

The AI system is then trained using multimodal transformers, recurrent neural networks (RNNs), or temporal convolutional networks (TCNs). Each modality contributes to the model’s learning process. Cross-modality attention mechanisms ensure that the model weighs each input appropriately, refining its predictions and enhancing the model’s ability to generalize across diverse scenarios.
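A cross-modality attention step can be illustrated with plain scaled dot-product attention, where text vectors act as queries over image-region keys and values. This is a simplified sketch: the learned projection matrices of a real transformer layer are omitted, and all vectors are made-up examples.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query attends over all keys/values."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors, one output per query.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

text_queries = [[1.0, 0.0]]                 # hypothetical text token vector
image_keys = [[1.0, 0.0], [0.0, 1.0]]       # hypothetical image region keys
image_values = [[10.0], [20.0]]             # hypothetical image region values
outputs, weights = cross_attention(text_queries, image_keys, image_values)
print(weights[0], outputs[0])
```

The attention weights show how strongly the text token draws on each image region, which is the mechanism the paragraph describes as weighing each input.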

Inference Generation & Decision-Making

Once trained, multimodal AI models can analyze new data and deliver high-precision insights. This stage involves leveraging learned patterns to make informed decisions, drawing on each input type. For example, in healthcare, the model could analyze patient data, medical imagery, and doctor’s notes to provide diagnostic recommendations or potential treatments.

Continuous Learning & Adaptation

Multimodal AI systems are continuously updated with new data to ensure they remain accurate and relevant. Reinforcement learning mechanisms allow the system to adapt, improving performance over time and applying new learning to improve future inferences.
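The article does not say which reinforcement learning mechanism is used, so the following is only one minimal illustration: an incremental value update of the kind used in bandit-style learning, where each new reward nudges the running estimate for the chosen action. The strategy names, rewards, and step size are hypothetical.

```python
def update_estimate(estimate, reward, step_size=0.1):
    """Move the running value estimate a fraction of the way toward the new reward."""
    return estimate + step_size * (reward - estimate)

def best_action(estimates):
    """Pick the action with the highest current value estimate."""
    return max(estimates, key=estimates.get)

# Hypothetical feedback stream: (action taken, reward observed).
feedback = [("strategy_a", 1.0), ("strategy_b", 0.0), ("strategy_a", 1.0)]

estimates = {"strategy_a": 0.0, "strategy_b": 0.0}
for action, reward in feedback:
    estimates[action] = update_estimate(estimates[action], reward, step_size=0.5)

print(estimates, best_action(estimates))
```

A constant step size weights recent feedback more heavily than old feedback, which suits systems whose data distribution drifts over time.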

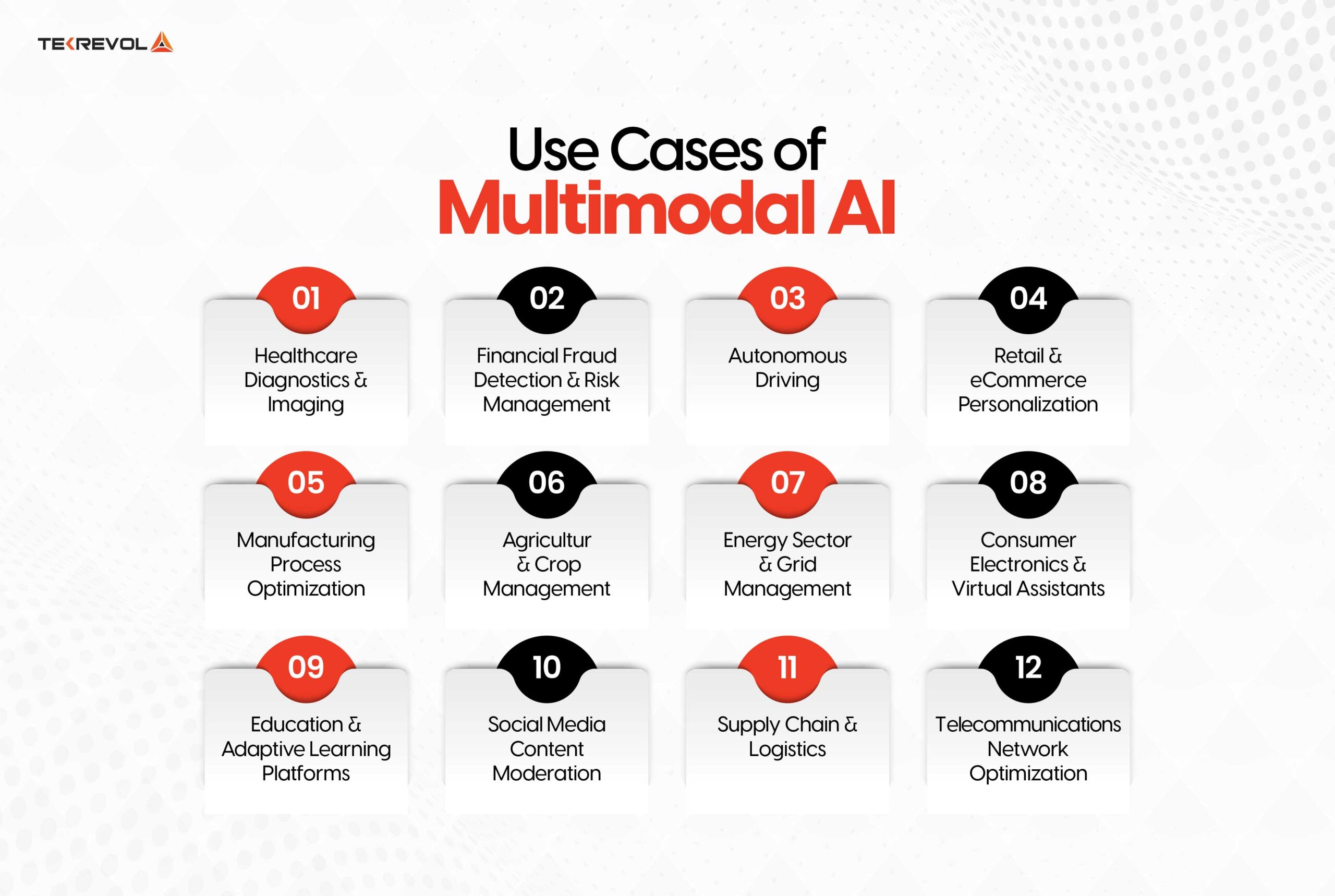

12 Industry-Specific Use Cases Of Multimodal AI

Multimodal AI helps organizations work with complex data from many sources and optimize operations across industries. Its applications range from diagnostics in healthcare to predictive analysis in manufacturing, where it provides accurate, detailed information that improves performance.

Here are 12 real-life examples of how multimodal AI is enhancing operations across industries, thanks to its technical flexibility, production readiness, and sophisticated data processing and management capabilities.

Healthcare Diagnostics & Imaging

In healthcare, multimodal AI integrates MRI and X-ray data with patient history and live vitals. AI models extract patterns from diagnostic images and match them against electronic health records (EHR) to support more accurate diagnoses. Patients benefit from systems that combine clinical notes and scans to reach diagnoses quickly and accurately, especially in oncology and radiology.

Financial Fraud Detection & Risk Management

Multimodal AI also helps detect fraud by analyzing transaction data, customer interactions, and behavioral patterns.

By combining real-time transaction logs with customer communications, AI systems can identify suspicious activity that might otherwise go undetected. Multimodal systems can also apply sentiment analysis to support interactions to pick up stress signals associated with fraud.

Autonomous Driving

Multimodal AI is critically important for self-driving vehicles. These vehicles combine camera feeds with LiDAR, radar, and GPS data to make decisions in real time.

All of these inputs are fed into the driving models and processed simultaneously to control the vehicle’s movement, recognize objects, and anticipate the actions of pedestrians and other vehicles on the road. This also helps AI systems improve route planning and safety measures to prevent collisions.

Retail & eCommerce Personalization

In retail, multimodal AI uses purchase history, product images, customer reviews, and live browsing patterns to decide which products to recommend. eCommerce platforms such as Amazon use multimodal AI to capture customers’ preferences across these modalities.

By analyzing product images together with user reviews, the AI returns more relevant results, which improves conversion rates.

Manufacturing Process Optimization

In smart factories, multimodal AI integrates data from IoT sensors, camera feeds, and production schedules to optimize workflow. The AI predicts machine failures by analyzing real-time sensor data and historical maintenance logs. Additionally, computer vision systems monitor product quality in real-time, identifying defects and alerting teams before issues escalate, which reduces downtime and operational inefficiencies.
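A simplified version of this kind of check might look like the sketch below, which flags a machine when a live sensor reading deviates strongly from its historical baseline or when a vision model reports a high defect probability. The thresholds, the readings, and the defect probabilities are all assumed values for illustration; the vision model itself is out of scope here.

```python
from statistics import mean, stdev

def z_scores(history, live):
    """Distance of each live reading from the historical mean, in standard deviations."""
    mu, sigma = mean(history), stdev(history)  # stdev needs at least two readings
    if sigma == 0:
        return [0.0 if x == mu else float("inf") for x in live]
    return [abs(x - mu) / sigma for x in live]

def maintenance_alerts(history, live, defect_probs,
                       z_threshold=3.0, defect_threshold=0.8):
    """Alert when either the sensor reading or the visual inspection looks abnormal."""
    return [
        z > z_threshold or p >= defect_threshold
        for z, p in zip(z_scores(history, live), defect_probs)
    ]

history = [10.0, 10.2, 9.8, 10.1, 9.9]   # hypothetical past vibration readings
live = [10.1, 11.0, 10.0]                # hypothetical current readings
defect_probs = [0.1, 0.2, 0.95]          # hypothetical vision-model outputs
alerts = maintenance_alerts(history, live, defect_probs)
print(alerts)
```

Here the second reading triggers on the sensor side and the third on the visual side, which shows why combining modalities catches cases that either one alone would miss.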

Agriculture & Crop Management

In agriculture, multimodal AI processes drone imagery, weather data, and soil conditions to provide actionable insights for farmers. For example, AI systems use satellite data and environmental sensor inputs to monitor crop health, assess irrigation needs, and predict yield outcomes. Precision agriculture is increasingly reliant on multimodal models to optimize resource usage and improve crop yields.

Energy Sector & Grid Management

Energy companies employ multimodal AI for grid optimization by analyzing sensor data, environmental conditions, and historical energy consumption patterns.

AI systems predict peak demand times, optimize energy distribution, and detect anomalies that might indicate equipment failures or inefficiencies. AI helps improve operational efficiency and predictively maintain grid infrastructure to minimize outages.

Consumer Electronics & Virtual Assistants

Devices like Amazon Alexa and Google Home rely on multimodal AI to process voice commands, detect contextual clues, and interact with users. These virtual assistants process real-time audio, text input, and user preferences to perform tasks such as setting reminders, playing music, or answering queries.

AI models trained on multimodal data help assistants understand nuanced voice commands, improving user interaction.

Education & Adaptive Learning Platforms

Educational tools use multimodal AI to create adaptive learning experiences. These systems integrate video lectures, student performance data, and interaction logs to personalize learning paths.

AI can suggest supplementary resources for students struggling with specific topics or accelerate content delivery for advanced learners. These systems continuously refine the student’s learning experience through data from quizzes, essays, and real-time feedback.

Social Media Content Moderation

Platforms like Facebook and Instagram use multimodal AI to moderate user-generated content. AI models detect harmful or inappropriate content in real time by analyzing text, images, and videos.

Social media platforms use multimodal systems to enhance user safety by flagging inappropriate behavior, and to optimize content recommendations based on user preferences and interactions across multiple content types.

Supply Chain & Logistics

Multimodal AI streamlines supply chain operations by processing GPS data, traffic patterns, and inventory levels. AI systems integrate this data to optimize delivery routes, predict supply shortages, and adjust logistics planning.

AI helps warehouse supervisors analyze inventory data, shipment schedules, and customer demand forecasts to optimize stock levels and reduce delivery times.

Telecommunications Network Optimization

Multimodal AI helps optimize network performance in the telecom sector. It thoroughly analyzes signal strength data, real-time traffic patterns, and user device information to predict network congestion, optimize bandwidth allocation, and proactively resolve connectivity issues.

Using multimodal AI, telecommunications providers can enhance call quality, reduce latency, and ensure consistent service across geographical locations, which improves both customer experience and operational efficiency. It also assists with predictive maintenance by identifying potential network failures before they impact users.

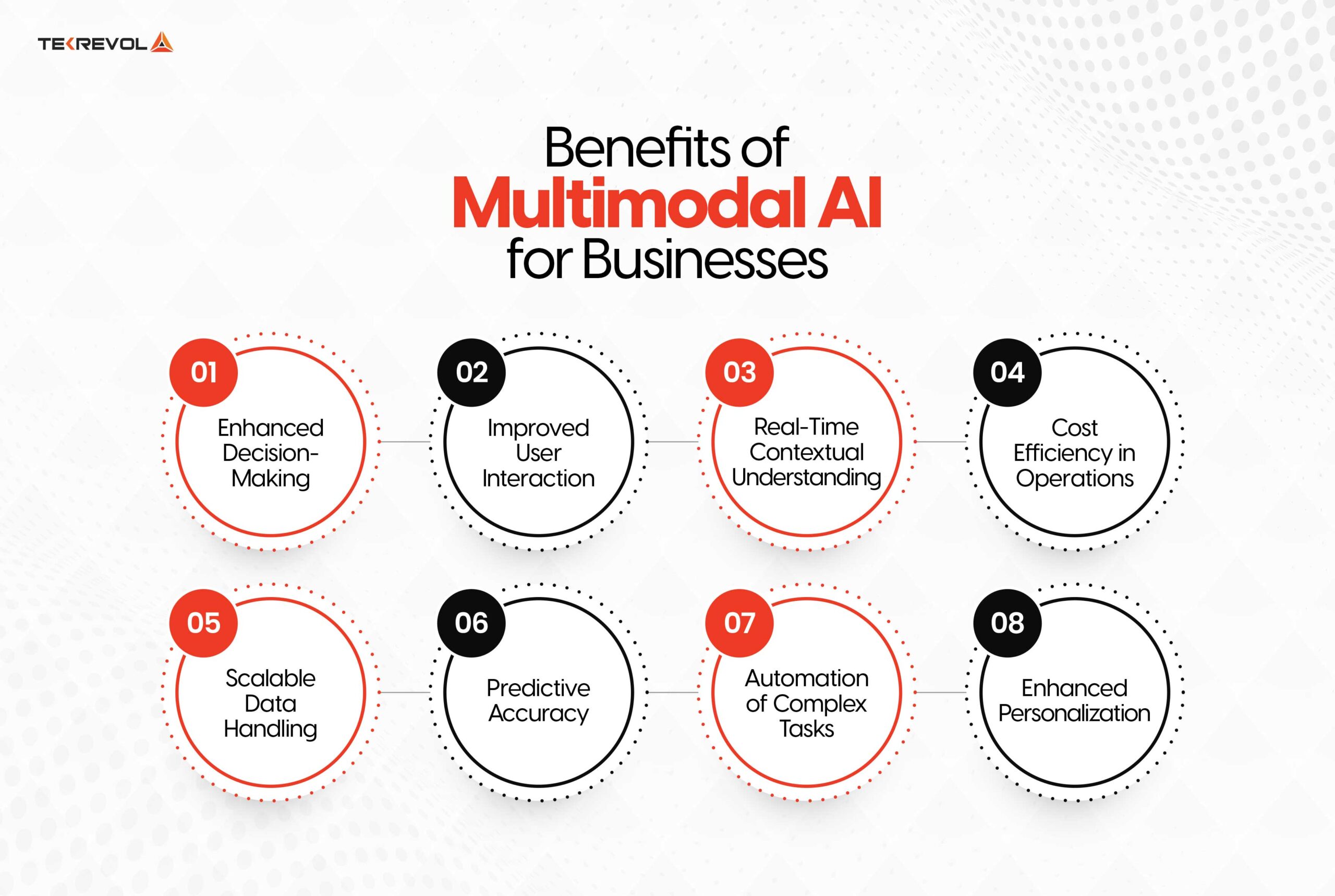

8 Benefits Of Multimodal AI

Multimodal AI integrates text, images, audio, and sensor data to create smarter, faster systems capable of handling complex tasks. This leads to more accurate results, real-time processing, and automation of intricate workflows.

Here are the key benefits multimodal AI offers across various industries.

Enhanced Decision-Making

Multimodal AI combines two or more modalities, such as vision, sound, and text, which supports better decision-making. Because multimodal AI systems process various forms of data at once, they can generate solutions that single-modality models cannot. This capability is especially valuable in industries where decisions depend on several forms of data, such as self-driving cars and medical diagnostics.

Improved User Interaction

Multimodal AI improves the user experience by taking voice, facial expressions, and hand gestures into account. This helps teams build natural, intuitive interfaces for voice assistants, virtual reality applications, and customer support.

For instance, a smart home application can use multimodal AI to understand spoken and gestural commands at the same time, which lets it act more precisely and give users better feedback.

Real-Time Contextual Understanding

Multimodal AI builds a better understanding of context by combining sensor data, environmental information, and real-time signals. This benefit is especially important in areas such as self-driving vehicles and robotics.

Robots equipped with multimodal AI can see, sense, and decide at once using data from cameras, sensors, and GPS. This real-time processing benefits manufacturing, logistics, and self-driving cars.

Cost Efficiency in Operations

Multimodal AI improves operational efficiency and saves costs across industries through process optimization. In manufacturing, it can analyze visual and sensor data to identify defects in real time, thereby minimizing equipment breakdowns.

Likewise, in healthcare, combining patient documentation, MRI scans, and real-time data makes it possible to diagnose a disease earlier, reducing the need for costly later-stage treatment. These efficiency gains increase ROI for businesses that adopt the technology.

Scalable Data Handling

Because multimodal AI works with more than one type of data, it is well suited to big data workloads. It proves useful for companies that handle vast volumes of varied data, as in retail analytics, where purchasing patterns, sales, and customer opinions can be analyzed concurrently. It also scales with data volume, making it a good option for companies that are growing quickly.

Predictive Accuracy

Fusing data across modalities enhances predictive accuracy. For example, in financial forecasting, AI systems can combine market patterns, news articles, and financial data to produce better stock estimates. This helps businesses understand their data and manage risks and opportunities effectively, and more accurate forecasts give companies a competitive advantage.

Automation of Complex Tasks

Multimodal AI is well suited to automating tasks that require understanding several kinds of data input. In medical imaging, for example, AI can support diagnosis by analyzing X-rays, CT scans, and patient information, work that otherwise takes considerable time and effort from healthcare professionals.

In retail, it can automate tasks such as using visual and sensor data to monitor inventory and forecast when replenishment will be needed.

Enhanced Personalization

Multimodal AI recognizes and engages customers more effectively than keyword-based approaches by drawing on voice, facial expressions, and behavior. For instance, in e-commerce, it can correlate a user’s browsing history with visual product preferences to offer recommendations. Recommendations tailored from multiple inputs increase customer satisfaction and, in turn, demand for products.

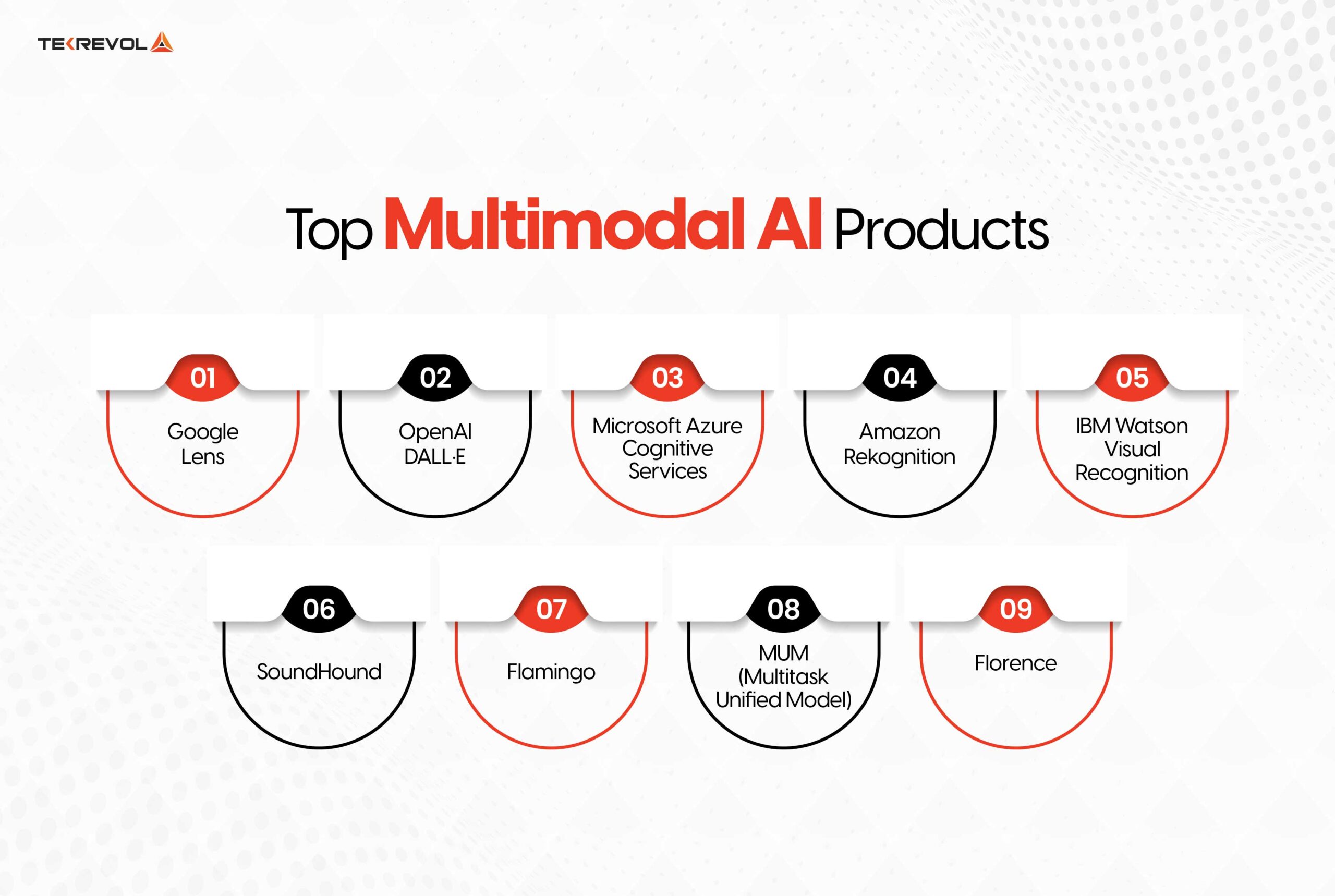

9 Multimodal AI Apps & Products Examples

In healthcare, retail, customer service, and other sectors, multimodal AI products use multiple inputs to analyze information, perform tasks, and support decisions. These tools integrate visual, audio, and textual data to provide actionable information. Here are some notable products in the multimodal AI space.

Google Lens

Google Lens is a multimodal AI system that takes images from a smartphone camera and maps them to textual information from the internet. By aiming the camera, users can identify plants, scan QR codes, and even translate text. It combines real-time computer vision with natural language processing (NLP) to identify objects in context.

OpenAI DALL·E

DALL·E is an image generation model created by OpenAI that converts text descriptions into original images. It interprets natural language and generates images that match the given description with a high level of detail. This ability to bridge text and images makes it useful in several fields, especially art and graphic design, where it can produce design concepts, artwork, or marketing images.

Microsoft Azure Cognitive Services

Azure Cognitive Services is a set of AI services covering speech, vision, text, and language. These services are applied in business areas such as healthcare, finance, and customer relations. By connecting multiple data inputs, Azure’s platform can carry out advanced tasks such as language translation and document scanning.

Amazon Rekognition

Amazon Rekognition is a service that uses AI to analyze images and videos. It takes in video streams and integrates the results with user data to provide outputs like facial recognition, object identification, and activity monitoring. It is employed in security, surveillance, and e-commerce to improve product personalization and safety measures. It can also estimate the emotions that faces appear to express in real time.

IBM Watson Visual Recognition

IBM Watson offers visual recognition tools that integrate with Watson’s natural language processing engine. This multimodal AI system can process visual content like images and videos and combine them with textual descriptions to extract meaningful insights. In industrial applications, Watson Visual Recognition can identify product defects on assembly lines or track items in warehouses through real-time video feeds.

SoundHound

SoundHound is an advanced voice AI platform that combines speech recognition with music identification. Users can speak or hum a song to receive information about it instantly. This system relies on audio processing and natural language understanding to deliver accurate results. It’s used widely in smart assistants, automotive voice systems, and entertainment to enhance user interaction through natural, voice-driven commands.

Flamingo

Developed by DeepMind, Flamingo is a state-of-the-art multimodal model designed for image-text understanding. By combining text prompts with images, Flamingo can generate captions, answer questions about images, and interpret visual inputs in context. This technology is beneficial in content moderation, digital marketing, and customer support, where understanding both text and visuals is crucial for accurate content handling.

MUM (Multitask Unified Model)

Introduced by Google, MUM can process and integrate multiple types of inputs such as text, images, and video to answer complex search queries. It is designed to understand and generate insights across 75 languages and handle cross-lingual tasks. This product is particularly effective for global e-commerce and international SEO, where understanding user intent across languages and media formats is critical.

Florence

Florence is a multimodal AI model developed by Microsoft for advanced computer vision tasks. It is particularly adept at combining image recognition with natural language processing, enabling it to identify objects and describe them in natural language. Florence is highly valuable in fields like surveillance, retail inventory management, and industrial automation, where image understanding in context is required for efficient operation.

TekRevol: Leading AI Development Company For Generative AI Solutions

TekRevol is an AI development company specializing in generative AI solutions that drive business performance through automation and intelligent decision-making. We build multimodal AI systems that integrate text, image, video, and audio processing to solve complex problems, optimize workflows, and enhance user experiences.

From content generation to AI-driven operations, TekRevol delivers scalable, tailored solutions to help businesses maximize the power of generative AI.

Wrap Up

Multimodal AI is reshaping industries by integrating diverse data types to deliver more precise, efficient, and intelligent solutions. From optimizing decision-making to automating complex workflows, its applications are vast.

As businesses adopt multimodal AI, leveraging benefits like improved accuracy, real-time insights, and operational efficiency will drive growth and innovation.

TekRevol leads this shift, providing the tools and expertise businesses need to integrate generative AI into their operations. Now is the time to embrace AI-driven solutions for the future.